AI’s Hidden Cost: The Erosion of Critical Thinking

Increased use of AI erodes critical thinking. How can we remain thoughtful users of AI?

Since the launch of ChatGPT in late 2022, the rise of generative AI has been nothing short of meteoric. Adoption of GenAI has been faster than virtually any tool in recent memory, and hundreds of millions of people now use generative AI chatbots to augment a wide array of tasks traditionally thought to be the sole domain of humans.

Unsurprisingly, this has caused widespread concern about the impact AI will have on the future of work. While most discussions of this topic emphasize the potential for job displacement, there is another – and potentially more pernicious – impact that has received less attention: the erosion of critical thinking at the hands of AI.

AI’s Impact on Critical Thinking

To leverage generative AI tools effectively, we must critically evaluate their outputs. Despite impressive advancements, AI is still prone to frequent mistakes, which users must learn to detect. The prevalence of AI hallucinations is well documented, and a recent analysis of AI chatbots found that they cite news articles incorrectly 60% of the time – an embarrassingly high rate. This spells trouble for users who blindly accept what AI generates.

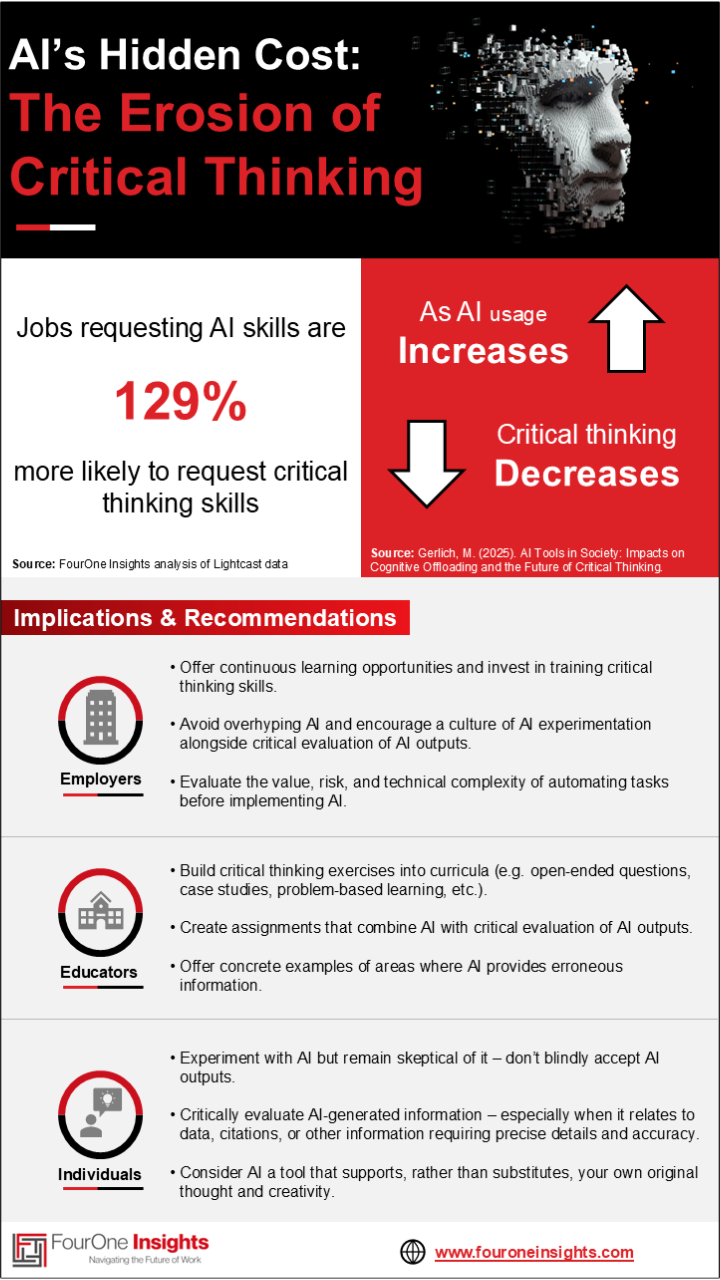

The need for workers to thoughtfully review AI-generated information is not lost on employers, and many organizations emphasize the need for employees to have robust critical thinking skills when using AI. Our analysis shows that job postings requesting AI skills are 129% more likely to also request critical thinking skills compared to other job postings, underscoring just how much employers value critical thinking among employees who use AI.

However, even as employers stress the need for strong critical thinking skills when using AI, new research suggests that AI itself is pushing our critical thinking abilities in the wrong direction. A recent study found that increased use of generative AI is associated with decreased critical thinking abilities – a troubling finding, given how important it is to critically evaluate AI-generated information.

As we offload more cognitive tasks to AI, our cognitive abilities are at risk of atrophying, making it harder for us to thoughtfully interrogate the outputs we receive from AI. If so, the more we use AI, the more likely we are to miss AI-generated mistakes. Put another way, it is akin to becoming a worse driver the more time you spend behind the wheel.

Reclaiming Our Ability to Think Critically

Taken together, this evidence portends a looming critical thinking crisis that threatens to exacerbate the worst consequences of AI while muting its potential benefits. However, the same study that documents the negative impact of AI on critical thinking also provides a glimmer of hope. Individuals with higher education levels were found to retain higher levels of critical thinking, even when they used AI more frequently. This suggests that ongoing education and training will be critical as AI adoption increases.

To hedge against AI’s tendency to reduce critical thinking, research such as the study referenced above suggests concrete steps that employers, educators, and individuals can take. These include the following (also summarized in the infographic below):

Employers: Offer continuous learning opportunities to your workers, but don’t think about reskilling only in terms of technical skills. Consider training that helps workers develop their critical thinking skills and that encourages them to critically evaluate AI outputs. Avoid overhyping AI, and create a culture that encourages AI experimentation alongside a realistic assessment of its shortcomings. Before automating a task, evaluate the value, risk, and technical complexity of doing so.

Educators: Infuse curricula with ample opportunities to build critical thinking skills – such as open-ended questions, case studies, problem-based learning, and other techniques – especially as you integrate AI into your courses. Provide assignments and projects that combine AI with critical evaluation of its outputs and offer concrete examples of areas where AI is most likely to stumble.

Individuals: Develop a healthy skepticism towards anything that AI generates and always double-check AI outputs – especially when they relate to data, citations, or other information where details and accuracy are paramount. Remember to view AI as a tool that supports, rather than substitutes for, your own original thought and creativity.

Infographic: AI’s Hidden Cost

The New Value of Old Skills

Some of these suggestions may sound old school, and that’s precisely the point. Training individuals in critical thinking and other “human” skills is nothing new, but even as AI and other digital technologies become increasingly commonplace, the value of legacy foundational skills endures – and, in many cases, is amplified.

AI is quickly becoming a table-stakes technology, and the organizations and individuals who separate themselves from the pack won’t simply leverage AI – they will leverage it more effectively than their peers. This will come down to asking AI the right questions, applying AI to the right problems, and ensuring AI gives the right answers. These are the hallmarks of good, old-fashioned critical thinking, which highlights one of the great paradoxes of AI: the more advanced this new technology gets, the more valuable our oldest human skills become. Let’s hope those are skills we never lose.